Imagine creating life-saving drugs faster, cheaper, and more effectively than ever before, all with the help of artificial intelligence (AI). At the heart of this revolution is a technology called transformers. Originally introduced for language tasks such as machine translation, and now behind tools like Google Translate and ChatGPT, transformers have proven to be far more than language experts. Their ability to process and analyze complex patterns in data makes them well suited to challenges in pharmaceutical research.

Drug design has always been a slow and expensive process, requiring years of testing and billions of dollars. Now, with transformers, scientists can accelerate this journey by generating new drug molecules, predicting how these molecules will interact with proteins in the body, and balancing multiple goals like safety and effectiveness—all before setting foot in a lab.

In this article, we’ll break down how transformers work, why they matter, and how they’re transforming the way drugs are discovered and developed. Whether you’re new to the world of AI, drug design, or both, this guide will help you understand how these technologies are bringing us closer to a healthier future.

Understanding Transformers in Drug Design

To understand how transformers are revolutionizing drug design, let’s first break down what they are and how they work. Don’t worry—it’s simpler than it sounds.

What Are Transformers?

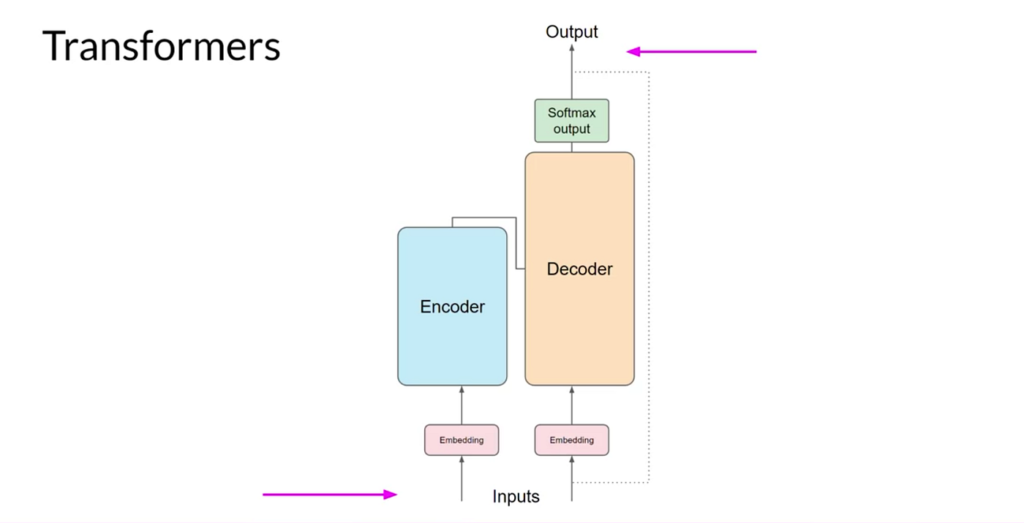

Transformers are a type of artificial intelligence (AI) model designed to process sequences of data. Imagine reading a recipe or a set of instructions: the order of the steps matters. Transformers excel at understanding sequences like this, whether they’re sentences in a language or, in our case, strings of chemical or biological data.

These models were originally developed for language tasks, such as translating text or summarizing articles. However, their ability to identify patterns and relationships within sequences has made them perfect for other complex problems, like drug design. Instead of words, transformers analyze molecules, proteins, and their interactions.

Why Are Transformers Important in Drug Design?

Drug design is all about understanding how molecules interact with biological systems to create effective treatments. Traditionally, this process relies on trial and error: scientists test hundreds or even thousands of compounds in the lab, hoping to find one that works. It’s slow, expensive, and often limited by human intuition.

Transformers change the game by doing the heavy lifting:

- Recognizing Patterns: Transformers can study vast amounts of data, such as how proteins and drugs interact, and use this knowledge to predict which molecules are likely to succeed.

- Generating New Molecules: They don’t just analyze existing data—they create entirely new drug candidates by piecing together molecules in innovative ways.

- Handling Complexity: Transformers excel at understanding complicated systems, such as how a drug might interact with multiple targets or how its structure impacts its behavior.

How Do Transformers Work?

The key to transformers is their self-attention mechanism. For every element in a sequence, the model computes a weight for every other element, which lets it focus on the most important parts of the sequence while still considering the overall picture. Think of it like reading a map: you might zoom in on a particular street while still being aware of the surrounding area.
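To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not a production implementation: the token embeddings are made-up numbers, and the same vectors are used as queries, keys, and values, whereas a real transformer learns separate projections for each.

```python
import math

def softmax(row):
    """Turn a list of scores into weights that sum to 1."""
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over one sequence.

    Each argument is a list of vectors, one per token. Returns the
    attention weights and the weighted mix of values for every token.
    """
    d = len(keys[0])
    weights = []
    for q in queries:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        for row in weights
    ]
    return weights, outputs

# Toy embeddings for three tokens (assumed values, not learned).
tokens = ["C", "C", "O"]
x = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
weights, outputs = self_attention(x, x, x)

for tok, row in zip(tokens, weights):
    print(tok, [round(w, 3) for w in row])
```

Each row of `weights` shows how much one token attends to every token in the sequence. Because every row is computed independently of the others, the whole sequence can be processed in parallel.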

Here’s how it applies to drug design:

- Analyzing Biological Sequences: Proteins are chains of amino acids, so each protein can be written as a sequence of letters. Transformers can analyze these sequences to predict how proteins behave or how they might interact with potential drugs.

- Mapping Chemical Structures: Using a language-like representation of molecules (e.g., SMILES), transformers understand the structure and function of chemical compounds. They can then tweak these structures to create better-performing drugs.

- Learning from Data: With access to massive datasets, transformers learn from past experiments to make smarter predictions about future drug candidates.
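Before a transformer can read a molecule, the SMILES string has to be split into tokens, much like a sentence is split into words. The sketch below shows one way to do that with a regular expression. The pattern covers only a common subset of SMILES syntax and is an assumption made for illustration; real pipelines typically use a more complete tokenizer.

```python
import re

# Order matters: bracket atoms and two-letter elements must be tried
# before single-letter atoms, so "Cl" is not split into "C" and "l".
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+\]|Br|Cl|%\d{2}|[BCNOSPFIbcnosp]|[=#\-+\\/:.~@$()]|\d)"
)

def tokenize_smiles(smiles):
    """Split a SMILES string into atom, bond, branch, and ring tokens."""
    tokens = SMILES_TOKEN.findall(smiles)
    # If anything was skipped, the pieces will not rebuild the input.
    if "".join(tokens) != smiles:
        raise ValueError(f"unrecognized characters in SMILES: {smiles!r}")
    return tokens

aspirin = "CC(=O)Oc1ccccc1C(=O)O"
tokens = tokenize_smiles(aspirin)

# Map each distinct token to an integer id, as a model's input layer expects.
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
ids = [vocab[t] for t in tokens]
print(tokens)
print(ids)
```

The resulting list of integer ids plays the same role for a molecule that word ids play for a sentence in a language model.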

Why Are Transformers Better Than Traditional Methods?

Traditional drug design methods rely on techniques like rule-based models or manually curated libraries of molecules. While useful, these approaches can’t fully capture the complexity of biology and chemistry. Transformers, on the other hand:

- Work faster by analyzing data in parallel rather than step-by-step.

- Explore a much larger chemical space, uncovering novel solutions.

- Adapt to new problems by learning directly from data, without needing hard-coded rules.

By combining their pattern recognition skills with the ability to generate new ideas, transformers are helping researchers find promising drug candidates faster and more efficiently than ever before. And this is just the beginning—later, we’ll dive into specific examples of how these models are being applied in real-world drug discovery.

Key Applications of Transformers in Drug Discovery

Transformers are reshaping drug discovery by addressing core challenges in molecular design, interaction prediction, and optimization. Below is an in-depth look at their applications, complete with detailed explanations and real-world use cases.

1. De Novo Drug Design

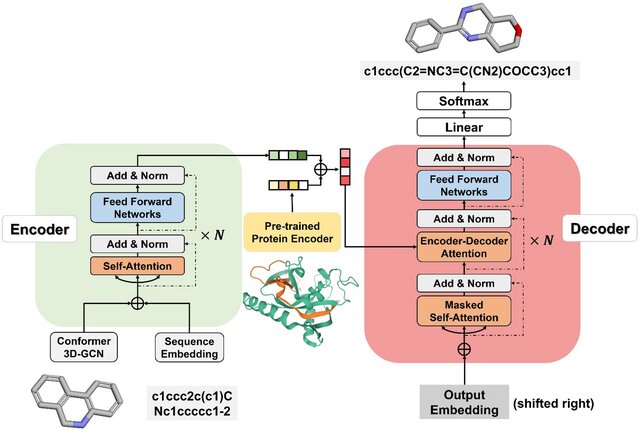

Transformers are changing de novo drug design by generating entirely new drug-like molecules rather than retrieving candidates from existing compound libraries. The design process is framed as a translation task, where a protein sequence is translated into a molecular representation like SMILES (Simplified Molecular Input Line Entry System). The transformer’s self-attention mechanism allows it to identify meaningful patterns within the protein sequence and generate novel compounds tailored to those patterns.

- Specific Use Case: In Grechishnikova’s research, a transformer model was trained to generate molecules that could potentially bind to SARS-CoV-2 targets. By analyzing viral protein sequences, the model suggested new molecules with high binding affinity and therapeutic potential.

- Details: These molecules were evaluated for structural novelty and validity. Over 90% of the generated molecules passed chemical validity checks, and the majority were unique compared to known compounds, highlighting the model’s ability to explore uncharted chemical spaces.

This application is particularly useful for diseases with limited treatment options, as it accelerates the identification of lead compounds.
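Validity, uniqueness, and novelty, the three measures mentioned above, are simple ratios once you have a way to decide whether a generated string is a valid molecule. The sketch below computes them in plain Python. The validity check here is only a crude syntax placeholder (balanced parentheses and paired ring digits) that we assume for illustration; an actual evaluation would parse each string with a cheminformatics toolkit such as RDKit and compare canonical SMILES rather than raw strings.

```python
def crude_syntax_check(smiles):
    """Placeholder check, NOT a chemical validity test.

    Accepts a string if parentheses balance and every single-digit
    ring-closure label outside brackets appears an even number of times.
    """
    depth = 0
    open_rings = set()
    in_bracket = False
    for ch in smiles:
        if ch == "[":
            in_bracket = True
        elif ch == "]":
            in_bracket = False
        elif in_bracket:
            continue  # digits inside [..] are charges or H counts
        elif ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
        elif ch.isdigit():
            open_rings ^= {ch}  # toggle: open the ring, or close it
    return bool(smiles) and depth == 0 and not open_rings

def generation_metrics(generated, known, is_valid=crude_syntax_check):
    """Fraction valid, fraction unique among valid, fraction novel among unique."""
    valid = [s for s in generated if is_valid(s)]
    unique = set(valid)
    novel = unique - set(known)
    return {
        "validity": len(valid) / len(generated) if generated else 0.0,
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
    }

# Hypothetical model output and reference set.
generated = ["CCO", "CCO", "c1ccccc1", "CC(=O", "CCN"]
known = {"CCO"}
metrics = generation_metrics(generated, known)
print(metrics)
```

Passing a stricter `is_valid` function changes only the first step; the three ratios are computed the same way.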

2. Protein-Ligand Interaction Prediction

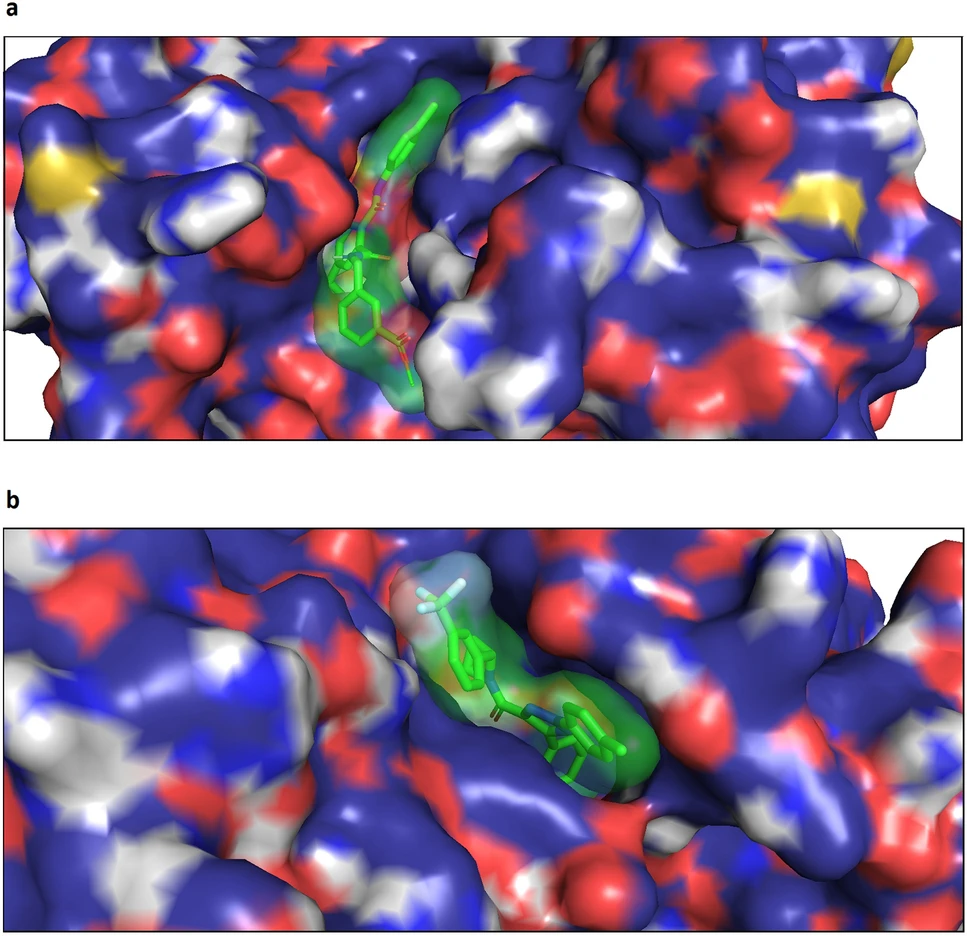

Understanding how a drug molecule (ligand) interacts with its target protein is essential for assessing its therapeutic potential. Transformers excel at analyzing these interactions by leveraging sequence data for both proteins and ligands, focusing on key regions that dictate binding.

- Specific Use Case: The Ligand-Transformer framework analyzes protein-ligand interactions by encoding the protein sequence and the ligand structure into its model. It predicts binding affinity scores, which indicate how strongly a molecule will bind to a given protein.

- Details: In one study, this approach significantly reduced the need for experimental testing by accurately identifying molecules with high binding affinities. For example, the model successfully predicted the top binding candidates for cancer-related kinases, enabling researchers to prioritize the most promising compounds for further development.

By modeling these interactions computationally, transformers save months of lab work and reduce costs associated with trial-and-error experimentation.

3. Multi-Objective Optimization for Drug Design

Designing an effective drug involves more than just ensuring it binds to its target. Drugs must also meet criteria such as safety, stability, and bioavailability. Transformers, when paired with optimization frameworks, can handle these multi-objective challenges by analyzing multiple properties simultaneously.

- Specific Use Case: A study by Aksamit et al. integrated a transformer model with many-objective optimization algorithms to evaluate and optimize drug candidates. The transformer generated molecules, and the optimization algorithm scored them based on criteria like ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) properties.

- Details: In this framework, over 10,000 molecules were evaluated. The model successfully identified compounds that not only showed strong binding but also demonstrated favorable ADMET properties, such as low toxicity and high solubility. This dual approach ensures that drug candidates are both effective and safe for further development.

Multi-objective optimization with transformers can help reduce late-stage failures, saving significant resources during clinical trials.
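A core idea behind multi-objective optimization is Pareto dominance: one candidate dominates another if it is at least as good on every objective and strictly better on at least one. The sketch below filters a set of candidates down to the non-dominated ones. It is a generic illustration, not the algorithm used in the study above, and the molecule names and scores are invented.

```python
def dominates(a, b):
    """True if a is at least as good as b everywhere and better somewhere.

    Scores are tuples where higher is better on every objective.
    """
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(candidates):
    """Return the names of candidates that no other candidate dominates."""
    return sorted(
        name
        for name, scores in candidates.items()
        if not any(
            dominates(other, scores)
            for other_name, other in candidates.items()
            if other_name != name
        )
    )

# Hypothetical scores: (binding, safety, solubility), each scaled to 0..1.
candidates = {
    "mol_A": (0.90, 0.40, 0.70),
    "mol_B": (0.60, 0.80, 0.60),
    "mol_C": (0.50, 0.30, 0.50),  # worse than mol_A on every objective
    "mol_D": (0.85, 0.75, 0.20),
}
front = pareto_front(candidates)
print(front)
```

The candidates left on the front represent different trade-offs, so a chemist still has to choose among them; the filter only removes options that are worse across the board.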

4. Virtual Screening and Compound Library Expansion

Virtual screening involves analyzing large libraries of chemical compounds to identify potential drug candidates. Transformers enhance this process by efficiently sifting through massive datasets and predicting which molecules are most likely to succeed.

- Specific Use Case: In one application, transformers were used to screen a library of over a billion molecules against targets involved in Alzheimer’s disease. The model predicted binding affinities and shortlisted a manageable number of candidates for experimental validation.

- Details: The transformer processed the data in parallel, dramatically reducing screening times. It prioritized compounds with high binding scores and desirable chemical properties, narrowing the pool from a billion to a few hundred high-potential candidates.

This application highlights how transformers reduce the workload for researchers while maintaining high precision in identifying lead compounds.
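Narrowing a huge library to a short list does not require holding every score in memory. The sketch below streams over a library and keeps only the top-scoring candidates. The scoring function is a hypothetical stand-in for a trained model's predicted binding affinity, and the tiny library is invented for illustration.

```python
import heapq

def shortlist(library, score_fn, k):
    """Keep the k highest-scoring molecules from an iterable of SMILES.

    heapq.nlargest consumes the iterable lazily, so only about k
    (score, smiles) pairs are held at any time.
    """
    return heapq.nlargest(k, ((score_fn(s), s) for s in library))

def toy_score(smiles):
    """Hypothetical scoring rule; a real pipeline would call a model here."""
    return smiles.count("N") + 0.5 * smiles.count("O") - 0.01 * len(smiles)

library = ["CCO", "CCN", "NCCN", "CCCC", "OCCO"]
top = shortlist(library, toy_score, k=2)

for score, smiles in top:
    print(f"{smiles}: {score:.2f}")
```

Because `library` can be any iterable, the same function works with a generator that reads molecules from disk one at a time.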

5. Property Prediction and Molecule Refinement

Before a drug reaches clinical trials, its properties—such as solubility, toxicity, and metabolic stability—must be optimized. Transformers predict these properties by analyzing molecular structures and identifying features that influence performance.

- Specific Use Case: Researchers used a transformer to predict toxicity levels in candidate drugs for cardiovascular conditions. By examining molecular features, the model identified potential toxicity risks and suggested modifications to improve safety.

- Details: The predictions were validated with existing datasets, showing over 85% accuracy in identifying high-toxicity compounds. The model also proposed safer molecular alternatives, allowing researchers to refine candidates before preclinical testing.

This predictive capability helps avoid costly failures in the later stages of drug development.
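When reading a figure like "85% accuracy," it helps to look at recall and precision too, since toxic compounds are often a small minority and accuracy alone can hide missed cases. The sketch below computes all three from binary labels. The labels are invented for illustration and do not come from the study described above.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = toxic)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn

def summarize(y_true, y_pred):
    """Return accuracy, recall, and precision for the toxic class."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }

# Hypothetical labels: 3 toxic compounds out of 10.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]
report = summarize(y_true, y_pred)
print(report)
```

In this toy case the model is right on most compounds overall, yet it still misses one of the three toxic ones, which is the kind of gap that recall makes visible.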

6. Target-Specific Drug Development

Transformers enable the development of drugs tailored to specific biological targets, even in cases where only sequence data (not 3D structural data) is available. By focusing on the target’s sequence, transformers can design molecules optimized for precise interactions.

- Specific Use Case: A transformer model was applied to generate inhibitors for a specific protein involved in breast cancer. Despite the lack of detailed structural data, the model generated molecules with high binding affinities, validated through computational docking studies.

- Details: The model used sequence data to identify regions of the protein critical for binding. Generated molecules showed competitive efficacy compared to existing drugs, demonstrating the model’s utility in addressing unmet medical needs.

This application is particularly useful for emerging diseases or rare conditions where traditional methods lack sufficient data.

These applications demonstrate how transformers are making drug discovery faster, more precise, and cost-effective. By generating new molecules, predicting interactions, and optimizing multiple drug properties, transformers are streamlining workflows and enabling researchers to tackle complex pharmaceutical challenges with greater efficiency.

Benefits of Using Transformers in Drug Design

Transformers have become a cornerstone of modern drug discovery, offering several advantages over traditional approaches and older AI models. These benefits range from improved efficiency to greater adaptability, making transformers an indispensable tool for pharmaceutical researchers.

1. Enhanced Efficiency in Data Processing

Transformers are designed to process large amounts of data simultaneously, rather than step by step, which is a significant improvement over traditional models like recurrent neural networks (RNNs). This parallel processing capability reduces computation time and accelerates drug discovery workflows.

- Example: During virtual screening, transformers can analyze millions of molecular sequences in a fraction of the time it would take conventional models. For instance, screening libraries of compounds for Alzheimer’s disease candidates took days instead of months when powered by transformers.

2. Ability to Handle Complex Biological and Chemical Data

Biological sequences (like proteins) and chemical structures (like molecules) are highly complex, with intricate patterns and long-range dependencies. Transformers excel at recognizing these patterns using their self-attention mechanism, which allows them to focus on relevant parts of the sequence while considering the whole.

- Why It Matters: Understanding long-range dependencies is crucial for tasks like predicting protein-ligand interactions or generating molecules that meet specific therapeutic needs.

3. Versatility Across Drug Discovery Stages

Transformers are not limited to a single application—they can be used throughout the drug discovery process. From designing new molecules and predicting their properties to optimizing their safety and efficacy, transformers provide a unified framework for tackling diverse challenges.

- Example: A transformer trained to generate new molecules can also predict their toxicity levels, offering an end-to-end solution for early-stage drug development.

4. Scalability for Large and Diverse Datasets

Transformers perform well with large and diverse datasets, allowing researchers to train models on comprehensive biological and chemical libraries. This scalability is essential for exploring vast chemical spaces and identifying innovative solutions.

- Why It Matters: Drug discovery often requires analyzing millions of data points, such as chemical properties, biological interactions, and clinical trial outcomes. Transformers streamline this process by handling large-scale data efficiently.

5. Improved Prediction Accuracy

The self-attention mechanism used by transformers can improve the accuracy of predictions, particularly for complex tasks like protein-ligand interaction modeling and ADMET property prediction. Because the model weighs the most relevant parts of each input, it can reduce errors and produce more reliable outputs.

Overcoming Challenges in Transformer-Based Drug Design

While transformers offer immense potential, their application in drug discovery comes with specific challenges. Addressing these hurdles is crucial to fully realizing their benefits.

1. High Computational Requirements

Training transformer models requires significant computational power due to their large number of parameters. This can limit their accessibility, especially for smaller research teams or organizations with limited resources.

- Solutions: Advances in hardware (e.g., GPUs and TPUs) and techniques like model pruning, quantization, and distributed training are helping reduce computational demands. Pre-trained models, which can be fine-tuned for specific tasks, also offer a more resource-efficient alternative.
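Quantization, one of the techniques mentioned above, stores model weights as small integers instead of floating-point numbers. The sketch below shows the basic idea with symmetric 8-bit quantization on a handful of made-up weights. It is a simplified illustration; real frameworks apply this per layer or per channel and handle many more details.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

# Made-up weights for illustration.
weights = [0.5, -1.27, 0.003, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(f"largest rounding error: {max_error:.4f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight.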

2. Data Limitations

Transformers rely on high-quality, diverse datasets for training. In drug discovery, such datasets are often scarce, particularly for rare diseases or novel targets. Additionally, biases in available data can lead to suboptimal model performance.

- Solutions: Techniques like data augmentation, synthetic data generation, and transfer learning can mitigate these issues. For instance, augmenting datasets with simulated molecular interactions allows models to learn from a broader range of scenarios.

3. Model Interpretability

Transformers are often criticized for being “black box” models, meaning their decision-making processes are difficult to interpret. This lack of transparency can be a barrier to adoption, particularly in highly regulated fields like drug development.

- Solutions: Explainable AI (XAI) techniques are being developed to make transformer predictions more interpretable. These methods provide insights into why a model made a particular prediction, improving trust and facilitating regulatory approval.

4. Integrating Biological and Chemical Knowledge

While transformers are excellent at learning patterns from data, they often lack domain-specific insights. Without integrating biological and chemical knowledge, their predictions can miss critical context.

- Solutions: Hybrid models that combine transformers with rule-based systems or graph neural networks (GNNs) are emerging as a solution. These models leverage the strengths of transformers while incorporating domain expertise to enhance accuracy.

5. Balancing Generalization and Specialization

Transformers trained on specific tasks may not perform well when applied to new problems, and overly generalized models may lack the precision needed for specialized tasks.

- Solutions: Modular transformer architectures allow researchers to fine-tune parts of a model for specialized tasks while retaining generalizable features. This balance ensures the model performs well across diverse applications.

The Future of Transformers in Drug Discovery

As the capabilities of transformers continue to evolve, their role in drug discovery is expected to expand even further. Advances in computing power, algorithm development, and data availability will enable transformers to tackle even more complex challenges in pharmaceutical research.

1. Personalized Medicine with Transformers

Transformers are poised to revolutionize personalized medicine by designing drugs tailored to individual genetic profiles. By analyzing patient-specific genomic data, transformers can predict which drug compounds will be most effective for a particular individual, minimizing trial-and-error approaches.

- Example: A transformer could design a cancer treatment specifically targeted at a patient’s unique genetic mutations, improving therapeutic outcomes while reducing side effects.

2. Integration with Multi-Modal Data

The future of drug discovery lies in integrating diverse data types, such as genetic sequences, 3D protein structures, clinical outcomes, and environmental factors. Transformers, when combined with multi-modal learning frameworks, will have the ability to process and learn from these diverse datasets.

- Impact: This integration will enhance predictions of drug efficacy and safety, providing a more holistic view of potential treatments.

3. Hybrid Models for Enhanced Performance

Researchers are already experimenting with combining transformers with other AI architectures, such as graph neural networks (GNNs) and diffusion models. These hybrid models can leverage the sequence-processing power of transformers while incorporating spatial and relational information from GNNs.

- Why It Matters: Hybrid models will allow transformers to better model complex molecular interactions, such as protein-ligand docking, and make more accurate predictions for 3D molecular structures.

4. Expanding Drug Discovery Pipelines

Transformers are expected to play a larger role in automating entire drug discovery pipelines, from molecule generation to clinical trial design. With advances in automation, researchers can significantly reduce the time and cost associated with developing new therapies.

5. Collaboration Across Disciplines

As transformers become more accessible, collaborations between AI researchers, chemists, biologists, and clinicians will grow. These interdisciplinary efforts will help refine transformer models, integrate domain knowledge, and improve real-world applications in drug development.

FAQs: Common Questions About Transformers in Drug Design

- What are transformers, and how do they work in drug design?

Transformers are advanced AI models that process sequences of data, such as amino acid chains or molecular structures. They use a self-attention mechanism to identify important patterns and relationships, making them ideal for tasks like molecule generation and protein-ligand interaction prediction.

- Can transformers create new drug molecules?

Yes, transformers can generate new drug molecules by analyzing patterns in existing data and designing novel compounds with specific properties. This process, known as de novo drug design, is faster and more efficient than traditional methods.

- How do transformers predict protein-ligand interactions?

Transformers analyze the sequences of proteins and ligands, focusing on regions most likely to interact. They predict binding affinities and suggest potential drug candidates, reducing the need for extensive experimental testing.

- What challenges do transformers face in drug discovery?

Key challenges include high computational requirements, limited availability of diverse and high-quality datasets, and the lack of interpretability in their predictions. Efforts to address these challenges include data augmentation, explainable AI, and hybrid models.

- Are transformers being used in real-world drug discovery?

Yes, transformers are already being used in research and development to generate molecules, predict interactions, and optimize drug properties. Their applications have accelerated timelines and reduced costs in several drug discovery projects.

- What’s next for transformers in drug design?

The future includes more personalized medicine, multi-modal data integration, hybrid AI models, and fully automated drug discovery pipelines. These advancements will make drug development faster, cheaper, and more precise.

Conclusion: The Transformative Role of Transformers in Drug Discovery

Transformers have reshaped how we approach drug discovery, offering unprecedented capabilities in designing new molecules, predicting protein-ligand interactions, and optimizing drug properties. By processing complex biological and chemical data with remarkable efficiency, transformers are accelerating timelines, reducing costs, and pushing the boundaries of what’s possible in pharmaceutical research.

However, as transformative as they are, transformers are not the final destination. The field of AI is evolving rapidly, and it’s only a matter of time before even more sophisticated architectures emerge. These future innovations may integrate advanced reasoning, multi-modal learning, and real-time adaptability, further revolutionizing the way we develop life-saving treatments.

For now, transformers remain a cornerstone of innovation, empowering researchers to tackle complex challenges and uncover new possibilities in drug design. Whether you’re an AI enthusiast, a pharmaceutical researcher, or someone curious about the future of medicine, transformers are a glimpse into a world where technology and biology converge to improve human health.

References:

- Grechishnikova, D. Transformer neural network for protein-specific de novo drug generation as a machine translation problem. Sci Rep 11, 321 (2021). https://doi.org/10.1038/s41598-020-79682-4

- Aksamit, N., Hou, J., Li, Y. et al. Integrating transformers and many-objective optimization for drug design. BMC Bioinformatics 25, 208 (2024). https://doi.org/10.1186/s12859-024-05822-6

- Qi, X., Zhao, Y., Qi, Z., Hou, S., Chen, J. Machine learning empowering drug discovery: applications, opportunities and challenges. Molecules 29(4), 903 (2024). https://doi.org/10.3390/molecules29040903 (PMID: 38398653; PMCID: PMC10892089)